As text-to-image AI tools such as Midjourney and DALL-E continue to improve, Runway’s recently launched text-to-video AI tool, Gen-2, appears to be the next logical step in generative AI technology.

It turns once surreal and static images into dynamic, realistic videos. Runway claims that “if you can say it, now you can see it,” and the program lives up to its promise. The software analyzes written prompts and produces videos based on the user’s input within minutes, if not seconds.

The software doubles as a video-ready production tool, making it possible to create new, realistic videos without heavy equipment or complex settings.

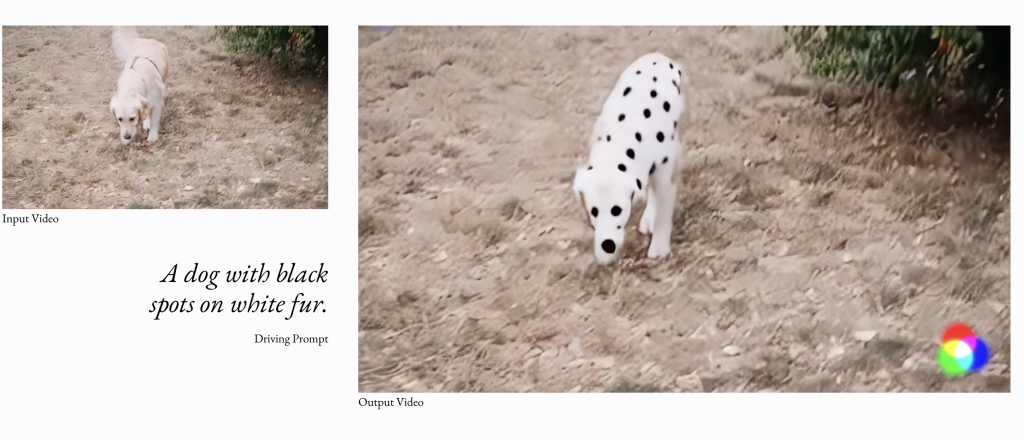

Gen-2 creates more realistic and surreal videos than its predecessor, Gen-1. The sharper pixel quality is noticeable and may trick viewers into thinking that the videos were created by a human, but their GIF-like flow gives them away. The unusual characters spotlighted in the videos, a product of the users’ input, also serve as a hint that the videos were created using AI technology.

“Deep neural networks for image and video synthesis are becoming increasingly precise, realistic, and controllable. In a couple of years, we have gone from blurry low-resolution images to both highly realistic and aesthetic imagery allowing for the rise of synthetic media… We believe that deep learning techniques applied to audiovisual content will forever change art, creativity, and design tools,” the company said in a statement.

With its ability to turn written words into realistic, dynamic video content, Gen-2 has the potential to revolutionize the video production industry.