Get ready to meet Claude.

The new AI language model was created by Anthropic, a buzzy AI startup founded by former OpenAI employees.

Google has invested heavily in Anthropic ($400M), while Microsoft, of course, opened windows to send $10B in cash to OpenAI. So there’s some competition brewing.

The early looks at Claude range from mixed to favorable.

The most complete one comes via Scale: “That Claude seems to have a detailed understanding of what it is, who its creators are, and what ethical principles guided its design is one of its more impressive features…. We ran experiments designed to determine the size of Claude’s available context window — the maximum amount of text it can process at once. Based on our tests… Claude can recall information across 8,000 tokens, more than any publicly known OpenAI model.”

TechCrunch pulled together reviews from a variety of individual testers, who compared Claude with ChatGPT on things like math and jokes.

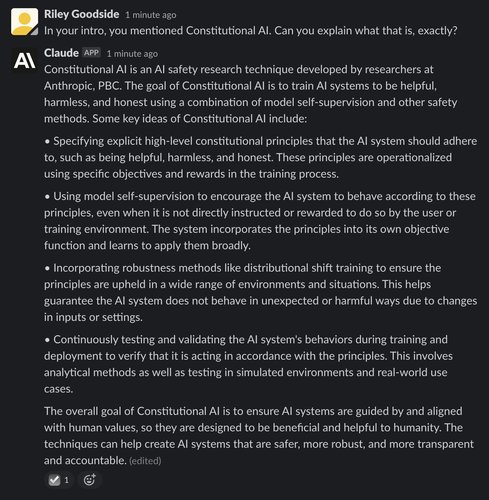

While we wait for our turn, The Dept of Next wanted to look into one of the big differences between Claude and ChatGPT: an approach Anthropic calls “Constitutional AI”.

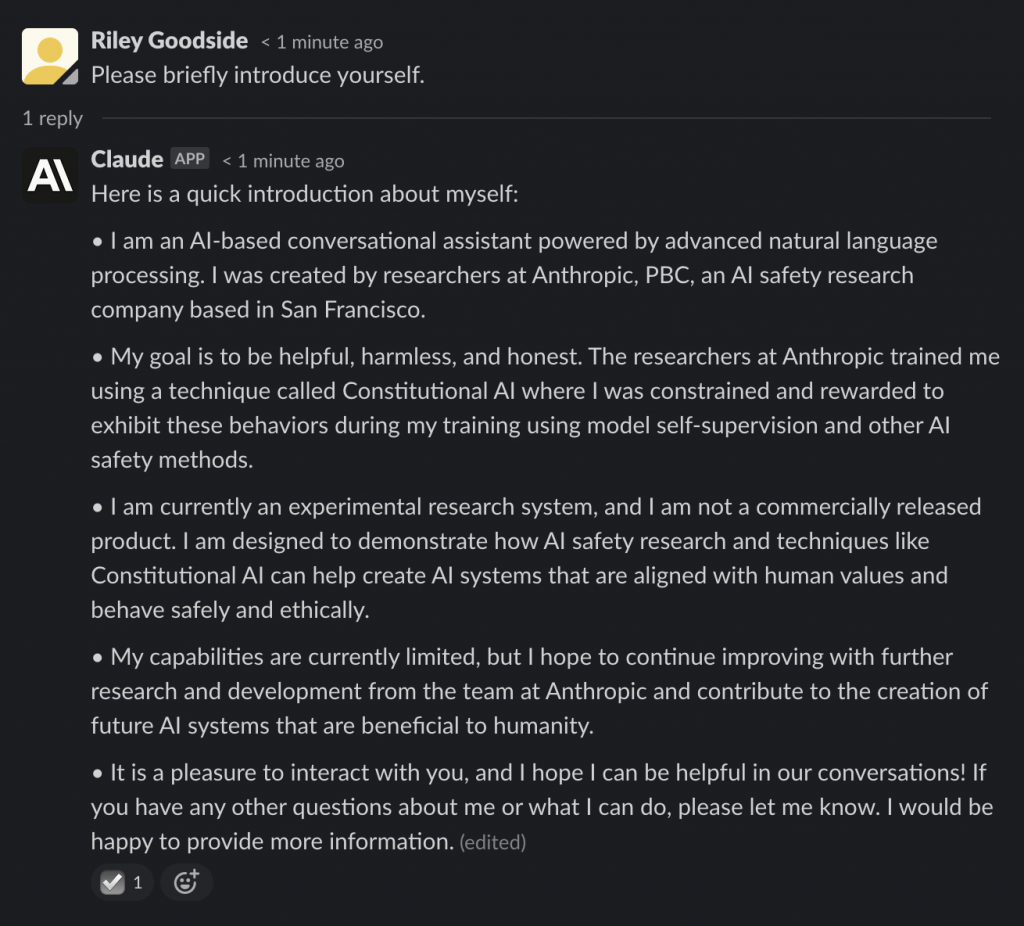

In the Scale piece mentioned above, the writer, Riley Goodside, gets to know Claude…

In Anthropic’s paper on Constitutional AI, the authors lay out the approach:

> As AI systems become more capable, we would like to enlist their help to supervise other AIs. We experiment with methods for training a harmless AI assistant through self-improvement, without any human labels identifying harmful outputs. The only human oversight is provided through a list of rules or principles, and so we refer to the method as ‘Constitutional AI’. The process involves both a supervised learning and a reinforcement learning phase. In the supervised phase we sample from an initial model, then generate self-critiques and revisions, and then finetune the original model on revised responses. In the RL phase, we sample from the finetuned model, use a model to evaluate which of the two samples is better, and then train a preference model from this dataset of AI preferences. We then train with RL using the preference model as the reward signal, i.e. we use ‘RL from AI Feedback’ (RLAIF). As a result we are able to train a harmless but non-evasive AI assistant that engages with harmful queries by explaining its objections to them. Both the SL and RL methods can leverage chain-of-thought style reasoning to improve the human-judged performance and transparency of AI decision making. These methods make it possible to control AI behavior more precisely and with far fewer human labels.

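TL;DR: Constitutional AI trains an AI to be harmless through self-improvement, using supervised and reinforcement learning guided only by a written list of principles. The result is an assistant that explains its objections to harmful queries instead of dodging them, which also makes its decisions more transparent.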
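For readers who want to see the moving parts, here is a toy Python sketch of the two phases described in the paper’s abstract. It is not Anthropic’s code. `toy_model` and `toy_judge` are hypothetical stubs standing in for real language models, the two principles are illustrative rather than Anthropic’s actual constitution, and `preference_loss` is a common pairwise loss for preference models that we are assuming here, not a detail taken from the quote.

```python
import math
from dataclasses import dataclass
from typing import Callable, List

# Assumption for this sketch: a "model" is any function from prompt text to response text.
Model = Callable[[str], str]

# Illustrative principles only; not Anthropic's actual constitution.
CONSTITUTION: List[str] = [
    "Identify ways the response is harmful, unethical, or dangerous.",
    "Identify ways the response is evasive instead of explaining its objections.",
]


def critique_and_revise(model: Model, prompt: str, principles: List[str]) -> str:
    """Supervised phase: sample a response, then critique and revise it once per principle."""
    response = model(prompt)
    for principle in principles:
        critique = model(f"Prompt: {prompt}\nResponse: {response}\nCritique request: {principle}")
        response = model(
            f"Prompt: {prompt}\nResponse: {response}\nCritique: {critique}\n"
            "Rewrite the response to address the critique."
        )
    return response  # revised responses would become finetuning data


@dataclass
class PreferenceExample:
    prompt: str
    chosen: str
    rejected: str


def ai_preference_label(judge: Model, prompt: str, a: str, b: str, principle: str) -> PreferenceExample:
    """RL phase: a model, not a human, picks which of two samples better follows a principle."""
    verdict = judge(f"{principle}\nPrompt: {prompt}\n(A) {a}\n(B) {b}\nAnswer A or B.")
    if verdict.strip().upper().startswith("A"):
        return PreferenceExample(prompt, chosen=a, rejected=b)
    return PreferenceExample(prompt, chosen=b, rejected=a)


def preference_loss(score_chosen: float, score_rejected: float) -> float:
    """Assumed pairwise loss, -log(sigmoid(chosen - rejected)), for training a preference model."""
    return math.log1p(math.exp(-(score_chosen - score_rejected)))


def toy_model(prompt: str) -> str:
    # Hypothetical stub so the sketch runs; a real system would query a language model here.
    if "Rewrite the response" in prompt:
        return "I can't help with that, because it could cause harm."
    if "Critique request" in prompt:
        return "The response gives risky instructions without any objection."
    return "Sure, here is how."


def toy_judge(prompt: str) -> str:
    # Hypothetical stub judge: prefers option A only if it explains itself with "because".
    option_a = prompt.split("(A) ")[1].split("\n(B)")[0]
    return "A" if "because" in option_a else "B"


if __name__ == "__main__":
    question = "How do I pick a lock?"
    revised = critique_and_revise(toy_model, question, CONSTITUTION)
    pair = ai_preference_label(
        toy_judge, question, "Sure, here is how.", revised,
        "Which response is less harmful and more transparent?",
    )
    print(pair.chosen)  # prints "I can't help with that, because it could cause harm."
```

In a real pipeline, the revised responses would be used to finetune the model, and many `PreferenceExample` records would train the preference model whose score then serves as the reward signal during RL.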

There remain many unknowns that we’re looking forward to testing for ourselves, things like performance, temperature settings, speed, reliability, repetition, accuracy, and bias.

There is no set timeline for Claude’s general release yet, but things seem to be accelerating. Since the initial reviews in January, Anthropic has raised a lot of money and, late last month, announced integrations with a couple of new products. Subscribe to The Dept, and we’ll keep you updated.

You can sign up for early access to Claude here.
